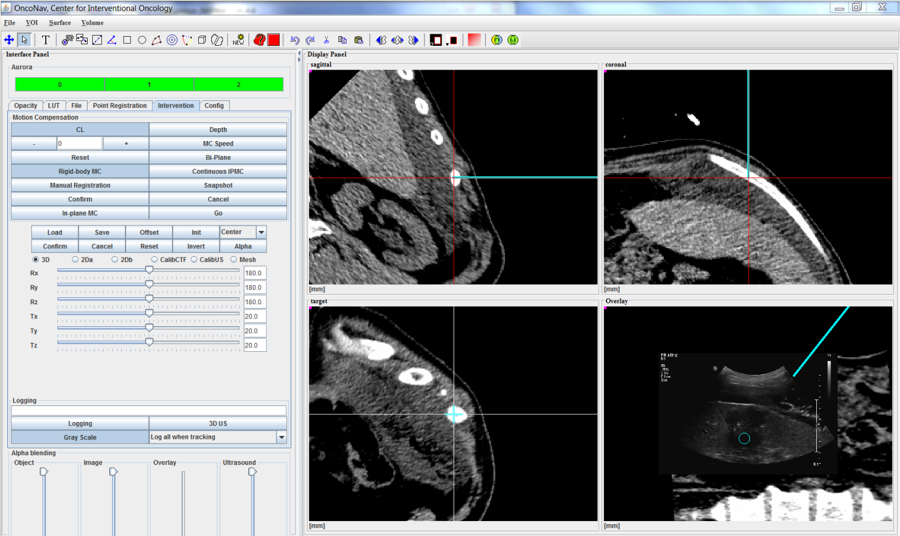

GPU 3D VISUALIZATION FRAMEWORK

PROJECT COLLABORATORS

NIH, Clinical Center, Center for Interventional Oncology:

Brad Wood (MD, Chief, Director), Sheng Xu (PhD)